Introduction

Modern software systems must handle large volumes of data quickly and reliably, around the clock. Cloud platforms and database engines often slow down not because the CPU is slow, but because they handle input and output (I/O) inefficiently.

This is where advanced I/O models become crucial. A system that can keep multiple reads and writes in progress at the same time is faster, more responsive, and easier to scale. This article explains what concurrent I/O is, how it works, and how it affects I/O-intensive programs in the real world, in a way that is accessible to both students and professionals.

What Does “Concurrent I/O” Mean?

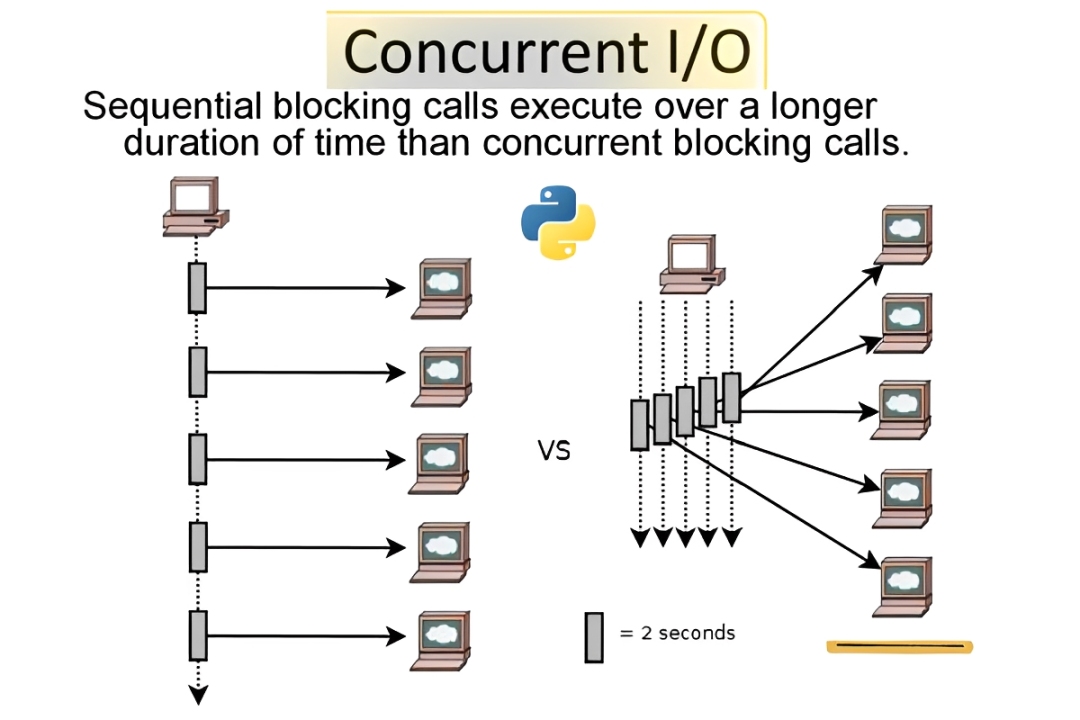

Concurrent I/O means that a system can handle more than one input and output operation at once without blocking the main flow of execution. Instead of sitting idle while one operation finishes, an application can keep doing other useful work.

Most operating systems, database management systems, and network servers work this way when speed matters. Asynchronous processing and parallel execution are two techniques that let modern software make better use of hardware resources such as CPUs, storage devices, and network interfaces.
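To make the idea concrete, here is a minimal Python sketch using the standard `asyncio` module. The `fake_io` coroutine is a hypothetical stand-in for a real network or disk call, simulated with `asyncio.sleep`. Running three 0.2-second operations one after another should take about 0.6 seconds, while starting them together should take roughly 0.2 seconds.

```python
import asyncio
import time


async def fake_io(name: str, delay: float) -> str:
    # Simulated I/O: asyncio.sleep yields control to the event loop,
    # much like a non-blocking socket read does while it waits for data.
    await asyncio.sleep(delay)
    return name


async def run_sequential(delays) -> list:
    # Each operation starts only after the previous one has finished.
    return [await fake_io(f"op{i}", d) for i, d in enumerate(delays)]


async def run_concurrent(delays) -> list:
    # All operations are started together; gather waits for every one
    # and returns the results in the order the coroutines were passed.
    return await asyncio.gather(*(fake_io(f"op{i}", d) for i, d in enumerate(delays)))


def timed(coro_fn, delays):
    # Run one strategy on a fresh event loop and measure wall-clock time.
    start = time.perf_counter()
    result = asyncio.run(coro_fn(delays))
    return result, time.perf_counter() - start


if __name__ == "__main__":
    delays = [0.2, 0.2, 0.2]
    _, seq_time = timed(run_sequential, delays)
    _, con_time = timed(run_concurrent, delays)
    print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

The waits are identical in both cases; only the concurrent version lets them overlap.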

Why High-Demand Applications Struggle

Traditional I/O models typically process operations one at a time: the current operation must finish before the next one can begin. While the program waits on a disk or network, the CPU sits idle, which increases response times, especially under heavy load.

Web servers and real-time analytics platforms are examples of high-demand applications that continuously process large volumes of data. Blocking I/O models make such systems hard to scale, whereas non-blocking and asynchronous approaches let them handle thousands of simultaneous requests efficiently.
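As a minimal sketch of the non-blocking approach, the server below uses Python's standard `asyncio` streams API (`asyncio.start_server` and `asyncio.open_connection`). The `handle_client` and `client` functions and the uppercase-echo protocol are hypothetical, chosen only for illustration. A single thread serves 50 simultaneous connections because each handler yields to the event loop whenever it waits on its socket.

```python
import asyncio


async def handle_client(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    # Each connection runs in its own coroutine; awaiting a read suspends
    # only this handler, so the event loop keeps serving other clients.
    data = await reader.readline()
    writer.write(data.upper())
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def client(port: int, message: str) -> str:
    # A simple client: send one line, read one line back.
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(message.encode() + b"\n")
    await writer.drain()
    reply = await reader.readline()
    writer.close()
    await writer.wait_closed()
    return reply.decode().strip()


async def main(num_clients: int = 50) -> list:
    # Port 0 asks the operating system for any free port.
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    async with server:
        # All clients connect at once; none waits for another to finish.
        replies = await asyncio.gather(
            *(client(port, f"request {i}") for i in range(num_clients))
        )
    return list(replies)


if __name__ == "__main__":
    replies = asyncio.run(main())
    print(len(replies), replies[:3])
```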

Core Principles That Enable Concurrent Execution

The most important ideas behind concurrent I/O are parallelism, non-blocking operations, and event-driven processing. Together, they keep work moving instead of letting a single slow operation stall everything else.

Key enabling mechanisms include:

- Asynchronous system calls

- Worker queues and thread pools

- Event loops and callbacks

- Efficient scheduling

These mechanisms work together so that applications can serve many users and high volumes of traffic at the same time.
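The worker-queue and pool idea from this list can be sketched with Python's `asyncio.Queue`: a fixed pool of worker tasks pulls jobs from a shared queue, so the number of in-flight operations stays bounded however many jobs arrive. The `worker` and `process_jobs` names and the doubling "job" are hypothetical placeholders for real I/O work.

```python
import asyncio


async def worker(name: str, queue: asyncio.Queue, results: list) -> None:
    # Each worker repeatedly pulls a job from the shared queue.
    while True:
        job = await queue.get()
        try:
            await asyncio.sleep(0.01)  # stand-in for a real I/O call
            results.append((name, job * 2))
        finally:
            queue.task_done()  # mark the job finished even if it failed


async def process_jobs(jobs, num_workers: int = 4) -> list:
    queue: asyncio.Queue = asyncio.Queue()
    results: list = []
    for job in jobs:
        queue.put_nowait(job)
    workers = [
        asyncio.create_task(worker(f"w{i}", queue, results))
        for i in range(num_workers)
    ]
    await queue.join()  # wait until every queued job is marked done
    for task in workers:
        task.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*workers, return_exceptions=True)
    return results
```

Raising `num_workers` increases concurrency; lowering it protects a fragile downstream resource.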

High-Load Throughput via Concurrent I/O

Concurrent I/O makes better use of system resources by overlapping computation with data transfer, which cuts down on idle time. This matters most for applications that read and write to disk, send and receive data over the network, or store data in the cloud.

Rather than finishing each operation before starting the next, the system keeps many operations in flight and interleaves them over time. This shortens response times and keeps performance steady even when many requests arrive at once.
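One common way to overlap computation with data transfer is prefetching: start fetching the next chunk before processing the current one. This sketch uses a background thread from the standard `concurrent.futures.ThreadPoolExecutor`; `fetch_chunk` and `process_chunk` are hypothetical and simulate I/O and CPU work with `time.sleep`. With 10 chunks and 0.05 s per step, a purely sequential loop would need about 1 second, whereas this pipeline should finish in roughly 0.55 seconds.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch_chunk(index: int) -> bytes:
    # Hypothetical blocking read (disk or network), simulated with a sleep.
    time.sleep(0.05)
    return bytes([index % 256]) * 1024


def process_chunk(chunk: bytes) -> int:
    # Hypothetical CPU-side work on a chunk that has already arrived
    # (simulated with a sleep so the timing is predictable).
    time.sleep(0.05)
    return sum(chunk)


def pipeline(num_chunks: int) -> list:
    results = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(fetch_chunk, 0) if num_chunks else None
        for i in range(num_chunks):
            chunk = pending.result()  # wait for the transfer already in flight
            if i + 1 < num_chunks:
                # Start the next transfer in the background before processing,
                # so I/O for chunk i+1 overlaps with work on chunk i.
                pending = pool.submit(fetch_chunk, i + 1)
            results.append(process_chunk(chunk))
    return results
```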

Operating System Mechanisms

Operating systems support many simultaneous I/O requests through kernel-level mechanisms such as asynchronous I/O APIs, multi-threading, and interrupt-driven processing. Examples include epoll and io_uring on Linux, kqueue on BSD and macOS, and I/O completion ports on Windows.

Modern kernels also make context switching cheaper and manage I/O buffers more efficiently. These improvements increase stability and help protect data integrity while allowing many operations to complete at the same time.
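Python's standard `selectors` module offers a portable view of these kernel readiness-notification mechanisms: `DefaultSelector` picks the most efficient one available on the platform (for example, epoll on Linux or kqueue on macOS and BSD). The sketch below registers three non-blocking sockets and asks the kernel which of them have data, so the program never blocks on the idle channel. The three `socketpair` channels are a hypothetical setup standing in for real client connections.

```python
import selectors
import socket


def demo() -> dict:
    # DefaultSelector uses the best mechanism on this platform
    # (e.g., epoll on Linux, kqueue on macOS/BSD).
    sel = selectors.DefaultSelector()
    pairs = [socket.socketpair() for _ in range(3)]
    for i, (reader, _writer) in enumerate(pairs):
        reader.setblocking(False)
        sel.register(reader, selectors.EVENT_READ, data=i)

    # Only channels 0 and 2 receive data; channel 1 stays idle.
    pairs[0][1].sendall(b"hello")
    pairs[2][1].sendall(b"world")

    received = {}
    while len(received) < 2:
        events = sel.select(timeout=1.0)  # ask the kernel which sockets are readable
        if not events:
            break  # nothing became ready within the timeout
        for key, _mask in events:
            received[key.data] = key.fileobj.recv(1024)

    sel.close()
    for reader, writer in pairs:
        reader.close()
        writer.close()
    return received
```

Event loops such as `asyncio` are built on this same pattern: wait for readiness, then run only the handlers whose sockets are ready.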

Concurrent I/O vs Sequential Processing

Concurrent and sequential models differ in how they execute operations and use resources. Sequential processing handles one operation at a time, whereas concurrent models allow many operations to be in progress simultaneously.

| Feature | Sequential I/O | Concurrent I/O |
| --- | --- | --- |
| Execution Flow | Linear | Parallel / Asynchronous |
| CPU Utilization | Low during waits | High and efficient |
| Scalability | Limited | Highly scalable |
| Latency | Higher | Significantly reduced |

This comparison clearly shows why modern applications favor concurrency-driven architectures.
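The latency row can be made concrete with a rough model. Assuming n independent operations with durations t_1, ..., t_n, and resources that can actually serve them in parallel, the total time for each approach is approximately:

```latex
T_{\text{seq}} = \sum_{i=1}^{n} t_i,
\qquad
T_{\text{conc}} \approx \max_{1 \le i \le n} t_i + c
```

Here c is an assumed term for scheduling and coordination overhead. For three 100 ms requests, that gives 300 ms sequentially versus roughly 100 ms plus overhead concurrently. Shared bottlenecks, such as a single saturated disk or a rate-limited API, push T_conc back toward T_seq.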

Role in Scalable Application Design

A scalable system keeps its performance as load grows. Concurrent I/O helps applications absorb more traffic by spreading out work and using system resources wisely.

Microservices, cloud-native platforms, and distributed systems all depend on this design pattern. It lets you add capacity with more servers while keeping response times steady.

Dealing with Resource Contention

Concurrency can improve performance, but poorly managed concurrency introduces bugs and bottlenecks. When many processes or threads compete for the same disk, memory, or network resources, they can block one another, causing slowdowns or even deadlocks.

Common strategies for managing contention include:

- Proper thread synchronization

- Rate limiting and load balancing

- Careful buffer management

- Monitoring resource usage

These strategies keep the system stable while preserving the benefits of concurrency.
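Two of these strategies, thread synchronization and limiting how many callers use a resource at once, can be sketched with Python's `threading` primitives. The hypothetical `ResourceGuard` class uses a `BoundedSemaphore` to cap concurrent access and a `Lock` to keep its shared counters consistent across threads. Note that a semaphore limits concurrency, not requests per second; a true time-based rate limiter would need additional logic.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class ResourceGuard:
    """Hypothetical helper: caps concurrent access to a shared resource."""

    def __init__(self, limit: int) -> None:
        self._slots = threading.BoundedSemaphore(limit)  # at most `limit` holders
        self._lock = threading.Lock()  # protects the counters below
        self.active = 0
        self.peak = 0

    def use(self, job: int) -> int:
        with self._slots:
            with self._lock:
                self.active += 1
                self.peak = max(self.peak, self.active)
            time.sleep(0.01)  # stand-in for a disk or network call
            with self._lock:
                self.active -= 1
        return job * 2


def run(jobs: int = 20, limit: int = 3, threads: int = 8):
    guard = ResourceGuard(limit)
    # More threads than slots: the semaphore makes the extra threads wait.
    with ThreadPoolExecutor(max_workers=threads) as pool:
        results = list(pool.map(guard.use, range(jobs)))
    return results, guard.peak
```

Even with eight threads available, no more than three of them should ever be inside the guarded section at the same time.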

Performance Optimization Strategies

Good results come from careful planning and continuous testing. When optimizing concurrent I/O, developers often rely on caching, batching, and performance-oriented data structures.

Other important techniques include:

- Asynchronous APIs and frameworks

- Non-blocking I/O

- Proper queue management

- Profiling and benchmarking under realistic contention

Applied consistently, these methods help performance keep improving over time.
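Batching, mentioned above, trades a little latency for far fewer I/O calls. The hypothetical `BatchWriter` below buffers records and hands them to a `sink` function in groups; in a real system the sink might be a bulk database insert or a single network write, which is an assumption of this sketch.

```python
from typing import Callable, List


class BatchWriter:
    """Hypothetical helper: buffers records and passes them to `sink` in groups."""

    def __init__(self, sink: Callable[[List[str]], None], batch_size: int = 100) -> None:
        self.sink = sink
        self.batch_size = batch_size
        self._buffer: List[str] = []

    def write(self, record: str) -> None:
        self._buffer.append(record)
        if len(self._buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        if self._buffer:
            self.sink(self._buffer)  # one I/O call for many records
            self._buffer = []  # start a fresh list; the sink keeps the old one

    def __enter__(self) -> "BatchWriter":
        return self

    def __exit__(self, *exc) -> bool:
        self.flush()  # never drop a partial final batch
        return False
```

Writing 250 records with a batch size of 100 produces three sink calls (100, 100, and 50 records) instead of 250.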

Measuring Performance Gains

Judge whether performance has improved with reliable metrics such as latency, throughput, and resource utilization. Benchmarking tools let you compare how a system behaves before and after you introduce concurrency.

Sound measurement lets you base decisions on data and helps ensure that your concurrency strategy matches both the application's needs and the infrastructure's capacity.
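A minimal benchmarking sketch using only the standard library: it records per-request latency and overall throughput for the same simulated workload, run sequentially and then concurrently. `timed_request` is a hypothetical stand-in for a real call, and reporting p50 and p95 latency is an illustrative choice.

```python
import asyncio
import statistics
import time


async def timed_request(delay: float) -> float:
    # Hypothetical request: simulated I/O of `delay` seconds; returns its latency.
    start = time.perf_counter()
    await asyncio.sleep(delay)
    return time.perf_counter() - start


async def benchmark(delays, concurrent: bool) -> dict:
    start = time.perf_counter()
    if concurrent:
        latencies = list(await asyncio.gather(*(timed_request(d) for d in delays)))
    else:
        latencies = [await timed_request(d) for d in delays]
    elapsed = time.perf_counter() - start
    cuts = statistics.quantiles(latencies, n=100)  # 99 percentile cut points
    return {
        "throughput_rps": len(delays) / elapsed,
        "p50_s": statistics.median(latencies),
        "p95_s": cuts[94],  # 95th percentile
    }


if __name__ == "__main__":
    delays = [0.02] * 50
    print("sequential:", asyncio.run(benchmark(delays, concurrent=False)))
    print("concurrent:", asyncio.run(benchmark(delays, concurrent=True)))
```

On this simulated workload, per-request latency should stay near the 20 ms delay in both modes, while throughput rises sharply when requests overlap. That is why it helps to track both metrics rather than only one.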

Frequently Asked Questions

1. Does every program benefit from concurrent I/O?

It benefits data-intensive, high-load programs the most; low-traffic systems may not need the added complexity.

2. Does concurrent I/O make a system more complex?

Yes, but for high-load systems the gains in speed and responsiveness usually justify the extra complexity.

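3. How does concurrent I/O affect CPU utilization?

It does not make individual I/O operations faster. Instead, it keeps the CPU busy with useful work while operations wait on disks or networks, which raises utilization and reduces idle time.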

4. Is multi-threading the same thing as concurrent I/O?

No, multi-threading is one way to get concurrency, but asynchronous models can also do concurrent I/O with just one thread.

5. Can concurrent I/O make cloud apps run faster?

Yes. It is widely used in cloud-native and distributed systems to support scalability and low latency.
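To illustrate the answer to question 4, that asynchronous models can perform concurrent I/O on a single thread, this sketch records the thread ID inside three overlapping `asyncio` tasks. The `fetch` coroutine is hypothetical and simulates I/O with `asyncio.sleep`. All three tasks should start before any of them finishes, yet every log entry carries the same thread ID.

```python
import asyncio
import threading


async def fetch(name: str, delay: float, log: list) -> str:
    log.append(("start", name, threading.get_ident()))
    # Simulated I/O wait: the event loop runs the other tasks meanwhile.
    await asyncio.sleep(delay)
    log.append(("end", name, threading.get_ident()))
    return name


async def main() -> list:
    log: list = []
    await asyncio.gather(
        fetch("a", 0.05, log),
        fetch("b", 0.05, log),
        fetch("c", 0.05, log),
    )
    return log


if __name__ == "__main__":
    for entry in asyncio.run(main()):
        print(entry)
```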

Conclusion

Concurrent I/O is a key part of modern software architecture because it lets programs handle heavy loads quickly and reliably. By avoiding blocking waits and making better use of hardware, it addresses many performance bottlenecks in modern systems.

Developers who want to build scalable, responsive, future-ready applications should learn to apply concurrent I/O effectively.
